- #Quickbuild splunk logging how to

- #Quickbuild splunk logging code

- #Quickbuild splunk logging free

- #Quickbuild splunk logging windows

Then, why do I need Jupyter Notebooks? 🤔 That’s a great question! What if I told you that you could connect to platforms such as Elasticsearch or Azure Sentinel via their APIs?

That means that if you are using any of those tools to query security events, you can easily get a sigma rule converted to their format and run it.


If you are wondering how to get that information, you can list the supported targets by running sigmac with the `-l` flag: `sigmac -l`


Let’s take a look at this sigma rule, `sysmon_wmi_event_subscription.yml`:

```yaml
title: WMI Event Subscription
id: 0f06a3a5-6a09-413f-8743-e6cf35561297
status: experimental
description: Detects creation of WMI event subscription persistence method
references:
    -
tags:
    - attack.t1084
    - attack.persistence
author: Tom Ueltschi
date: 2
logsource:
    product: windows
    service: sysmon
detection:
    selector:
        EventID:
            - 19
            - 20
            - 21
    condition: selector
falsepositives:
    - exclude legitimate (vetted) use of WMI event subscription in your network
level: high
```

What can I do with it? According to the Sigma Converter (sigmac) documentation, as of, you can translate rules to the following query formats:

I highly recommend reading a few of my previous blog posts to get familiar with some of the concepts and projects I will be talking about in this one:

- What the HELK? Sigma integration via Elastalert.
- Writing an Interactive Book 📖 over the Threat Hunter Playbook 🏹 with the help of the Jupyter Book Project 💥.

In this post, I will show you how I translated every rule from the Sigma project to Elasticsearch query strings with the help of sigmac, created Jupyter notebooks for each rule with a Python library named nbformat, and finally added them to the HELK project to execute them against Mordor datasets.
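The batch translation step can be scripted. The following is a minimal sketch, not the author's script. It assumes the legacy `sigmac` CLI is installed and on `PATH`, and it calls sigmac once per rule with `-t es-qs` (the Elasticsearch query-string target) plus an optional `-c` config file. The helper names and the rules-directory layout are hypothetical; run `sigmac -l` first to confirm that `es-qs` is available in your version.

```python
import subprocess
from pathlib import Path


def sigmac_command(rule, target="es-qs", config=None):
    """Build the sigmac argument list for a single rule file (hypothetical helper)."""
    cmd = ["sigmac", "-t", target]
    if config is not None:
        cmd += ["-c", str(config)]
    cmd.append(str(rule))
    return cmd


def translate_rules(rules_dir, target="es-qs", config=None):
    """Translate every *.yml rule under rules_dir; return {rule path: query string}.

    Rules that sigmac fails to convert (non-zero exit code) are skipped.
    """
    queries = {}
    for rule in sorted(Path(rules_dir).rglob("*.yml")):
        result = subprocess.run(
            sigmac_command(rule, target, config),
            capture_output=True,
            text=True,
        )
        if result.returncode == 0:
            queries[str(rule)] = result.stdout.strip()
    return queries
```

Keeping the command builder separate from the loop makes it easy to swap in another target from the `sigmac -l` list without touching the rest of the script.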


It sounded very easy to do at first, but I had no idea how to create notebooks from code or how I was going to execute sigma rules on top of them.
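Creating a notebook from code is what nbformat is for. The following is a minimal sketch, assuming nbformat's v4 helpers (`new_notebook`, `new_markdown_cell`, `new_code_cell`). It builds one notebook per rule with a markdown header and a code cell holding the translated query. The function names and the two-cell layout are hypothetical, not the structure of the author's notebooks.

```python
import nbformat
from nbformat.v4 import new_code_cell, new_markdown_cell, new_notebook


def build_rule_notebook(title, description, query):
    """Return a notebook with a markdown header and a code cell storing the query."""
    nb = new_notebook()
    nb.cells = [
        new_markdown_cell(f"# {title}\n\n{description}"),
        # repr() turns the query into a valid Python string literal
        new_code_cell(f"query = {query!r}"),
    ]
    nbformat.validate(nb)  # raises a ValidationError if the notebook is malformed
    return nb


def write_rule_notebook(nb, path):
    """Serialize the notebook to an .ipynb file at path."""
    nbformat.write(nb, path)
```

For the WMI rule, you would call `build_rule_notebook("WMI Event Subscription", "Detects creation of WMI event subscription persistence method", query)` with the query string produced by sigmac, and then pass the result to `write_rule_notebook`.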


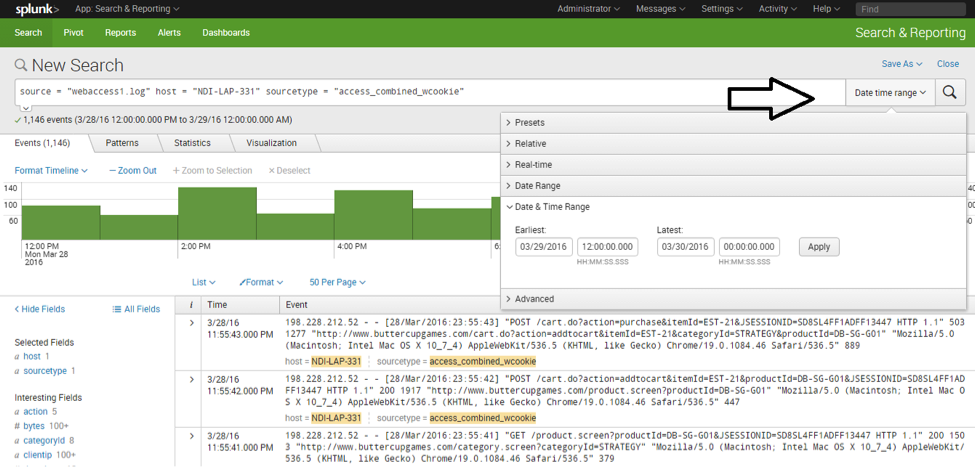

Happy new year everyone 🎊! I’m taking a few days off before getting back to work, and you know what that means 😆 Besides working out a little bit more and playing with the dogs, I have some free time to take care of a few things on my to-do list for open source projects or ideas 😆🍻 One of them was to find a way to integrate Jupyter Notebooks with the SIGMA project.

I have a Python script running on a server that should be executed once a day by the Celery scheduler. I want to send my logs directly from the script to Splunk, and I am trying to use the splunk_handler library for this. If I run splunk_handler locally without Celery, it seems to work. But if I run it together with Celery, no logs seem to reach the splunk_handler. The only output is `Timer thread executed but no payload was available to send`.

Apparently, Celery sets up its own loggers and overwrites Python's root logger. I tried several things, including connecting to Celery's setup_logging signal to prevent it from overwriting the loggers, and setting up the logger inside that signal. How do I set up the loggers correctly, so that all the logs go to the splunk_handler?

This is how I set up the logger and Celery:

```python
import logging
import os

from celery import Celery
from celery.signals import setup_logging
from splunk_handler import SplunkHandler

# Logger setup at the beginning of the file
logger = logging.getLogger(__name__)
splunk_handler = SplunkHandler(
    host=os.getenv('SPLUNK_HTTP_COLLECTOR_URL'),
    port=os.getenv('SPLUNK_HTTP_COLLECTOR_PORT'),
    # other SplunkHandler arguments (token, index, ...) not shown
)
splunk_handler.setFormatter(logging.Formatter(logging.BASIC_FORMAT))  # needs a Formatter, not a str
splunk_handler.setLevel(os.getenv('LOGGING_LEVEL', 'DEBUG'))
logger.addHandler(splunk_handler)

# Celery initialisation (not sure if worker_hijack_root_logger needs to be set to False);
# CELERY_BROKER_URL is defined elsewhere in the project
app = Celery('name_of_the_application', broker=CELERY_BROKER_URL)

# Connecting to Celery's setup_logging signal; setting up the handler in here was also tried
@setup_logging.connect
def config_loggers(*args, **kwargs):
    pass
```

When I enable debug on the Splunk handler (`debug=True`), it only logs that no payload is available, as posted above. Does anybody have an idea what's wrong with my code?

After hours of figuring out what could be wrong with my code, I now have a result that satisfies me. First I created a file `loggingsetup.py` where I configured my Python loggers with `dictConfig`: LOGGING =
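The `LOGGING` dictionary in the answer is cut off, so the following is only a sketch of the same dictConfig approach and is not the author's `loggingsetup.py`. It assumes the splunk_handler package, whose `SplunkHandler` accepts `host`, `port`, `token` and `index`. The `SPLUNK_TOKEN` and `SPLUNK_INDEX` environment variable names are hypothetical. Celery's documentation states that it won't configure the loggers when a receiver is connected to `setup_logging`, so the receiver below applies the config itself. The Celery import is guarded so that the module also loads where Celery isn't installed.

```python
import logging
import logging.config
import os


def build_logging_config():
    """Return a dictConfig dict that attaches a Splunk HEC handler to the root logger.

    Keys other than class/level/formatter in the handler entry are passed to
    SplunkHandler(...) as keyword arguments. SPLUNK_TOKEN and SPLUNK_INDEX are
    hypothetical variable names.
    """
    level = os.getenv("LOGGING_LEVEL", "DEBUG")
    return {
        "version": 1,
        "disable_existing_loggers": False,  # keep loggers created at import time
        "formatters": {"basic": {"format": logging.BASIC_FORMAT}},
        "handlers": {
            "splunk": {
                "class": "splunk_handler.SplunkHandler",  # resolved when dictConfig runs
                "formatter": "basic",
                "level": level,
                "host": os.getenv("SPLUNK_HTTP_COLLECTOR_URL"),
                "port": os.getenv("SPLUNK_HTTP_COLLECTOR_PORT"),
                "token": os.getenv("SPLUNK_TOKEN"),
                "index": os.getenv("SPLUNK_INDEX"),
            },
        },
        "root": {"handlers": ["splunk"], "level": level},
    }


def configure_logging():
    logging.config.dictConfig(build_logging_config())


try:
    from celery.signals import setup_logging
except ImportError:  # Celery not installed: skip the signal hookup
    setup_logging = None

if setup_logging is not None:
    @setup_logging.connect
    def config_loggers(*args, **kwargs):
        # Because this receiver is connected, Celery leaves logging setup to us
        configure_logging()
```

Because the handler sits on the root logger, module loggers created with `logging.getLogger(__name__)` propagate to it. `worker_hijack_root_logger = False` is Celery's other documented switch, but it only stops the worker from removing existing root handlers and does not install any handlers itself.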
